‘Shift Left’ Responsible AI

What is Responsible AI?

(Part 1/2)

Written by:

Senior Consultant

Sapience Consulting

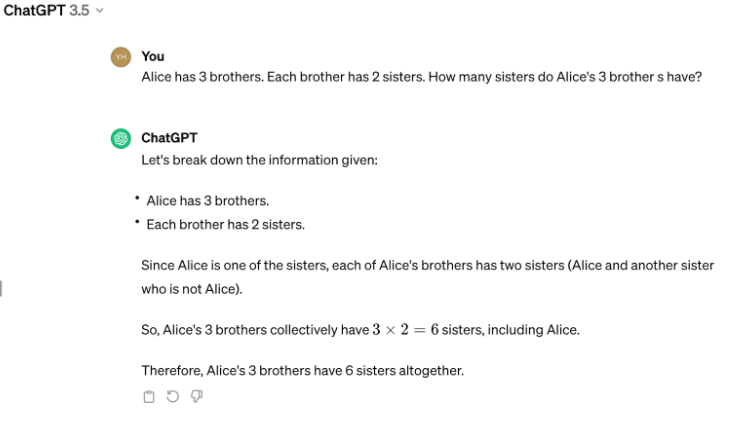

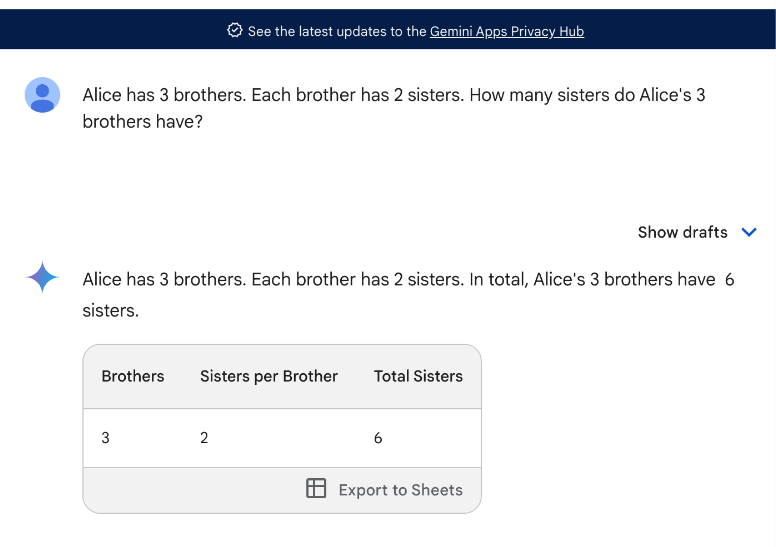

Alice has 3 brothers. Each brother has 2 sisters. How many sisters do Alice’s 3 brothers have?

This is my favourite question of late. Before you blurt out the answer, let’s ask AI (Artificial Intelligence) for help!

(I trust your answer is 2: Alice and her other sister.)

This example is a reminder that we should exercise caution even as we embrace AI out of FOMO (Fear Of Missing Out).

What is Responsible AI?

Responsible AI is an approach to developing and deploying artificial intelligence (AI) that takes both ethical and legal points of view into account. The ‘ethical’ and ‘legal’ perspectives have two things in common:

1) Both are subject to social and cultural differences.

2) Their precise requirements are subject to interpretation.

Nevertheless, we can confidently say that, compared with the ethical bar, the legal bar is the minimum to fulfil.

At Microsoft, Google and AWS, ‘Responsible AI’ has accompanied the adoption of AI practices. Across these three major players, we can find six common themes that define the qualities of Responsible AI. Let’s illustrate them with one use case: applying AI to approve loan applications at financial institutions.

The qualities of Responsible AI

When it comes to using AI for loan approvals, there are six key aspects to consider for responsible implementation.

- Fairness / Inclusiveness

ensures that the AI doesn’t discriminate on the basis of factors such as race, gender, religion, neighbourhood or age.

– Is the output (result) ‘fair’?

– How do we decide what is fair and inclusive enough?

- Explainability / Interpretability

requires the ability to understand why a loan is rejected and how to improve future applications.

– Are we able to explain to customers why their applications were rejected or why the approved amounts are lower?

– Are we able to advise customers on how to improve their credit ratings?

- Privacy / Security / Safety

are paramount, ensuring compliance with data protection laws and safeguarding personal information.

– Does the solution comply with the requirements of the Personal Data Protection Act?

– Are we able to demonstrate compliance?

– Is the solution protected against cyber threats?

- Transparency

demands clear explanations of the AI model for regulators and stakeholders.

– Are we able to disclose the necessary information to authorities, internal / external auditors and relevant stakeholders?

- Reliability / Robustness

is crucial, ensuring consistent and accurate loan decisions.

– Is the AI performing consistently?

– Can we be sure it is performing as expected at all times?

- Accountability / Governance

establishes who is responsible for AI decisions and how any potential issues will be addressed.

– How are decisions about the AI solution made? For example, when is it good enough to go live?

– What happens when incidents occur?
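The Fairness / Inclusiveness questions above have no single answer, but it helps to see what one possible definition looks like in practice. As an illustration only, a commonly cited metric compares approval rates between groups and divides the lowest rate by the highest (often called the disparate impact ratio); the ‘four-fifths rule’ from US employment guidelines is sometimes borrowed as a rough 0.8 threshold. The sketch below assumes hypothetical group labels, made-up decisions and that 0.8 threshold; none of these is a regulatory requirement for lending in every jurisdiction.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False. Group labels are hypothetical.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, is_approved in decisions:
        totals[group] += 1
        if is_approved:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so all groups are equal (all zero)
    return min(rates.values()) / highest


# Hypothetical decisions from a loan-approval model (not real data)
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 42 + [("group_b", False)] * 58
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
# Assumed threshold: ratios below 0.8 are flagged for human review
print("review needed" if ratio < 0.8 else "within threshold")
```

Here group_b is approved at 0.42 versus 0.60 for group_a, a ratio of about 0.7, so the check flags the model for review. A low ratio does not prove discrimination on its own, and other definitions of fairness (for example, equal error rates across groups) can conflict with this one, which is why choosing the definition is a governance decision rather than a purely technical one.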

What is required to practise Responsible AI?

To achieve the above qualities, we need a holistic framework.

The required elements are:

A. Governance

- Accountability

Define accountability, roles and responsibilities.

- Policy

Embed organisational values and principles into enforceable policies and keep them up to date.

- Decision-Making

Frame decision-making mechanisms and be prepared to evolve them. Many questions asked in the earlier section (e.g. how do we define ‘fair’?) do not have definite answers, and the answers evolve over time.

- Resources

Ensure management support, budget and human resources are available.

- Compliance

Integrate this topic with the overall checks-and-balances framework.

- Risk

Integrate this topic with the overall risk management framework.

B. People

While the technology behind AI is impressive, creating a truly responsible system requires a broader approach. We need a strong foundation of:

- Talent

Acquire and/or grow talent.

- Awareness

Educate general employees and promote awareness.

- Training

Provide ongoing educational programmes.

- Continual Improvements

Make continual improvement a mandate, as the topic is evolving rapidly.

C. Process

- Identify

Integrate with existing incident and event management processes to detect events, handle alerts and report incidents.

- Diagnose

Integrate with existing problem management processes and ensure adequate tools/practices are available to conduct in-depth analysis.

- Response

Pay attention to potential major or security incidents and enhance internal/external communication processes.

- Monitor

Complete the feedback loop: if any workarounds, fixes or improvements are deployed, continually monitor the performance.
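For the Monitor step, one check that is widely used in credit scoring (though not mandated by the frameworks discussed here) is the Population Stability Index (PSI). It compares the distribution of model scores today with the distribution at go-live, summing (actual share − expected share) × ln(actual share / expected share) over score bins. The minimal sketch below assumes scores have already been counted into the same bins, uses made-up counts, and applies the common rule-of-thumb thresholds of 0.1 and 0.25, which are industry conventions rather than a standard.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline and a current score distribution.

    Both arguments are bin counts over the same score bins.
    `eps` floors each share to avoid division by zero and log(0)
    when a bin is empty.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    exp_total = sum(expected_counts)
    act_total = sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / exp_total, eps)
        a_share = max(a / act_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


def drift_status(psi):
    """Map a PSI value to an action (assumed rule-of-thumb thresholds)."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor closely"
    return "investigate"


# Hypothetical counts of applicants per score bin (not real data)
baseline = [100, 200, 400, 200, 100]    # at go-live
this_month = [150, 250, 300, 200, 100]  # current month

psi = population_stability_index(baseline, this_month)
print(round(psi, 3), drift_status(psi))
```

With these made-up counts the PSI is roughly 0.06, so the model is treated as stable. In practice such a check would run on a schedule and feed alerts into the existing incident and event management processes described under Identify.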

D. Products

- Tools

Explore tools for model evaluation, security controls/safeguards, bias detection, explainability assistance and continual monitoring.

- Integration with security / privacy

Integrate with existing security and privacy controls.
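Explainability assistance ranges from dedicated libraries to simple, model-specific techniques. As a minimal sketch, assume a hypothetical linear (points-based) credit score: each feature’s contribution can be measured as its weight times its difference from a reference applicant, and the most negative contributions can be reported as the main reasons for a rejection. This speaks to both explainability questions in the loan example, namely why an application was rejected and what the customer could improve. The class, feature names, weights and reference values below are invented for illustration; non-linear models would need dedicated explanation methods instead.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LinearScorecard:
    """Hypothetical linear credit score: intercept + sum(weight * value)."""

    intercept: float
    weights: dict[str, float]

    def score(self, applicant: dict[str, float]) -> float:
        return self.intercept + sum(
            w * applicant[name] for name, w in self.weights.items()
        )

    def contributions(self, applicant, reference):
        """Per-feature contribution relative to a reference applicant."""
        return {
            name: w * (applicant[name] - reference[name])
            for name, w in self.weights.items()
        }


def top_reasons(contribs, n=2):
    """Features that pulled the score down the most, most negative first."""
    negatives = [(name, c) for name, c in contribs.items() if c < 0]
    negatives.sort(key=lambda item: item[1])
    return [name for name, _ in negatives[:n]]


# All names, weights and values are invented for illustration.
model = LinearScorecard(
    intercept=0.0,
    weights={"income_k": 0.5, "debt_ratio": -40.0, "missed_payments": -15.0},
)
reference = {"income_k": 60, "debt_ratio": 0.3, "missed_payments": 0}
applicant = {"income_k": 55, "debt_ratio": 0.5, "missed_payments": 2}

contribs = model.contributions(applicant, reference)
print(top_reasons(contribs))
```

For this invented applicant, the two missed payments and the higher debt ratio are the largest negative contributions, so they would be reported first; the same list doubles as advice on what to improve before reapplying.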

E. Partners

- Open-source

Refer to the OWASP AI Security and Privacy Guide and the NIST AI Risk Management Framework (AI RMF).

- Cloud providers

Leverage cloud providers, for example Microsoft, Google and AWS.

- Consultancy

Seek independent consultancy help.

All the above elements require early consideration, design and integration when organisations embark on an AI journey.

What happens when AI acts irresponsibly?

A framework proposed by Prof. Kate Crawford describes the types of harm that AI can cause:

- Harms of Allocation

When the solution allocates an opportunity or resource to certain groups, or withholds it from them. For example, only certain profiles of customers are able to obtain business loans.

- Harms of Representation

  - Stereotyping: e.g. associations between gender pronouns and occupations. In our loan use case, a subgroup of loan applicants is deemed likely to default on loans.

  - Recognition: a failure to recognise someone’s humanity, e.g. a system that cannot process darker skin tones. Does the solution work for YOU? In our loan use case, the bank’s biometric authentication (using AI) struggles to recognise minorities.

  - Denigration: e.g. the use of culturally offensive or inappropriate labels, such as ‘the stingy ones’ or ‘the lazy ones’. In our loan use case, AI assistants may use insensitive language.

  - Under/Over-representation: e.g. an image search for ‘CEOs’ yielded only one woman CEO, at the bottom of the page; the majority were white men. In our loan use case, AI assistants may serve only certain cases quickly and effectively.

  - Ex-nomination: e.g. dominant groups become so obvious or ‘common sense’ that they do not need to draw attention to themselves by giving themselves a name. They are simply the ‘normality’ against which everything else is judged. In our loan use case, default cases get expedited while other cases might miss out on opportunities.

What’s Next?

Organisations strive for efficiency, and AI seems to arrive as a saviour. However, AI can have serious consequences if it is not used with care.

We will explore how to embrace AI solutions responsibly in Part 2 (stay tuned for next week’s article).
